LLM (Large Language Model): Unlocking New Possibilities for Enterprise AI Applications


AI applications are flourishing and gradually transforming how businesses operate, but basic AI applications can no longer meet the growing diversity of business needs. As the technology advances, many innovative tools and applications have emerged, and LLMs (Large Language Models) have become one of the key technologies driving AI applications to a higher level.

Key Capabilities of LLMs: Versatility, Advanced Learning, and Cross-Domain Applications

The key strength of LLMs lies in their comprehensive and versatile abilities, including:

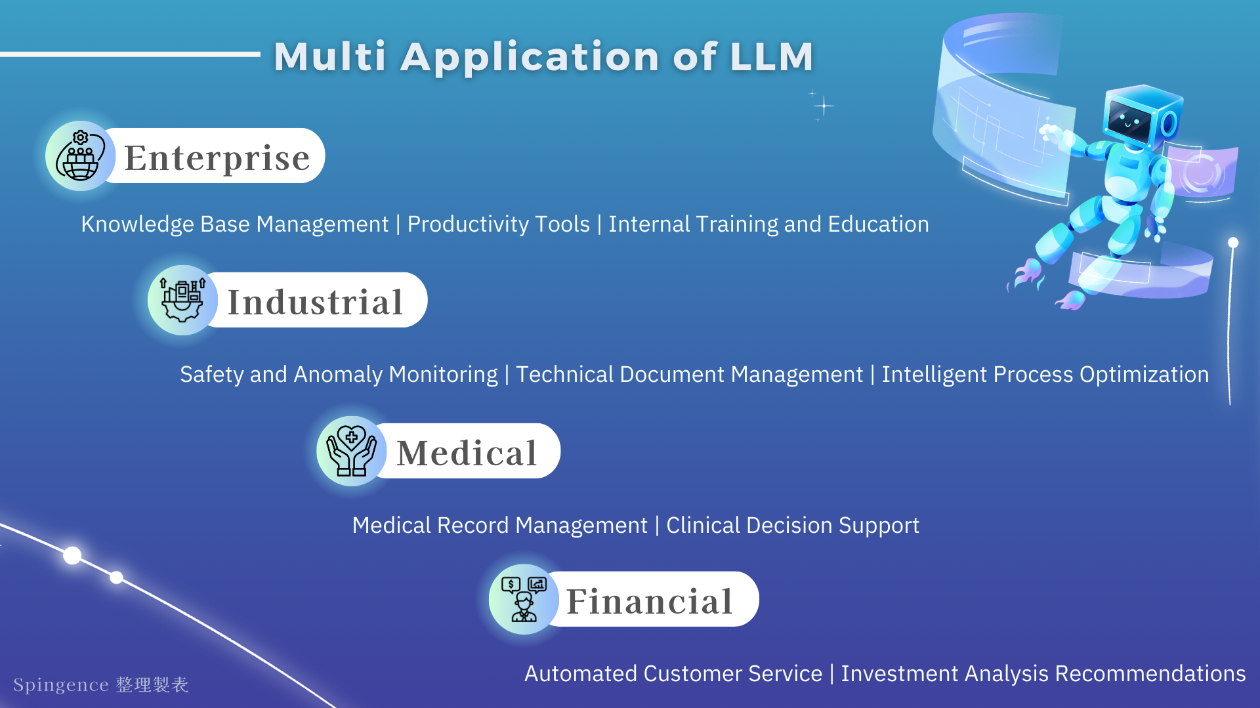

● Multifunctionality: LLMs can handle a wide range of tasks and are not limited to a single function. Examples include text translation, content summarization, and content generation.

● Pre-training of Models: LLMs are pre-trained on vast amounts of text data to learn the patterns and structures of human language, enabling more coherent understanding and expression.

● Learning and Reasoning: LLMs possess logical reasoning abilities, allowing them to provide suggestions or solve problems based on input data.

● Cross-Domain Application: LLMs are adaptable to various fields, such as industrial applications, healthcare, finance, and education.

● Adaptability: Beyond answering specific questions, LLMs can address unfamiliar problems by retrieving relevant information from their knowledge bases to provide accurate responses.

● Context Sensitivity: LLMs understand the context of input text and can generate coherent responses that stay on topic throughout the interaction.

Choose the LLM That Suits Your Needs and Help Your Organisation Improve Its Efficiency

The rapid and diverse development of LLM technology has led to widespread applications and a growing variety of model types. The scale of an LLM is typically described by the size of its training dataset and its number of parameters. Currently, the LLMs commonly used by businesses come in four main sizes: 7B, 13B, 33B, and 70B, where "B" denotes billions of parameters.

For different business requirements, companies can flexibly choose the appropriate model scale. For example, smaller models like 7B and 13B require fewer computational resources and are better suited for basic applications, such as automated customer service, simple text generation, or preliminary data summarization. These lightweight models are ideal for tasks requiring high real-time performance with limited resources.

On the other hand, larger models like 33B and 70B offer stronger semantic understanding and content generation capabilities. They are suitable for more complex tasks, such as cross-domain applications, research and development, or generating technical documents. These larger models excel at handling context-rich content, making them ideal for tasks in professional and specialized fields.
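To make the resource difference between these sizes more concrete, the following minimal sketch estimates the memory needed just to hold the model weights, using the rule of thumb that weight memory is roughly the number of parameters multiplied by the bytes used per parameter. The precision options (`fp16`, `int8`, `int4`) and the function names are illustrative assumptions, not figures from any specific product. Actual requirements are higher, because the estimate leaves out runtime overhead such as activations and caches.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: the precisions below are common storage formats chosen for
# illustration; they are not specified in the article.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Model sizes named in the article, in billions of parameters.
MODEL_SIZES_B = [7, 13, 33, 70]


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def estimate_table(precisions=("fp16", "int8", "int4")) -> dict:
    """Build {model size: {precision: GB}} for every size in MODEL_SIZES_B."""
    return {
        size: {p: weight_memory_gb(size, p) for p in precisions}
        for size in MODEL_SIZES_B
    }


if __name__ == "__main__":
    for size, row in estimate_table().items():
        cells = ", ".join(f"{p}: {gb:.1f} GB" for p, gb in row.items())
        print(f"{size}B -> {cells}")
```

Under these assumptions, a 7B model stored at 16-bit precision needs about 14 GB for its weights, while a 70B model needs about 140 GB, which is one reason the smaller models suit resource-constrained deployments.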

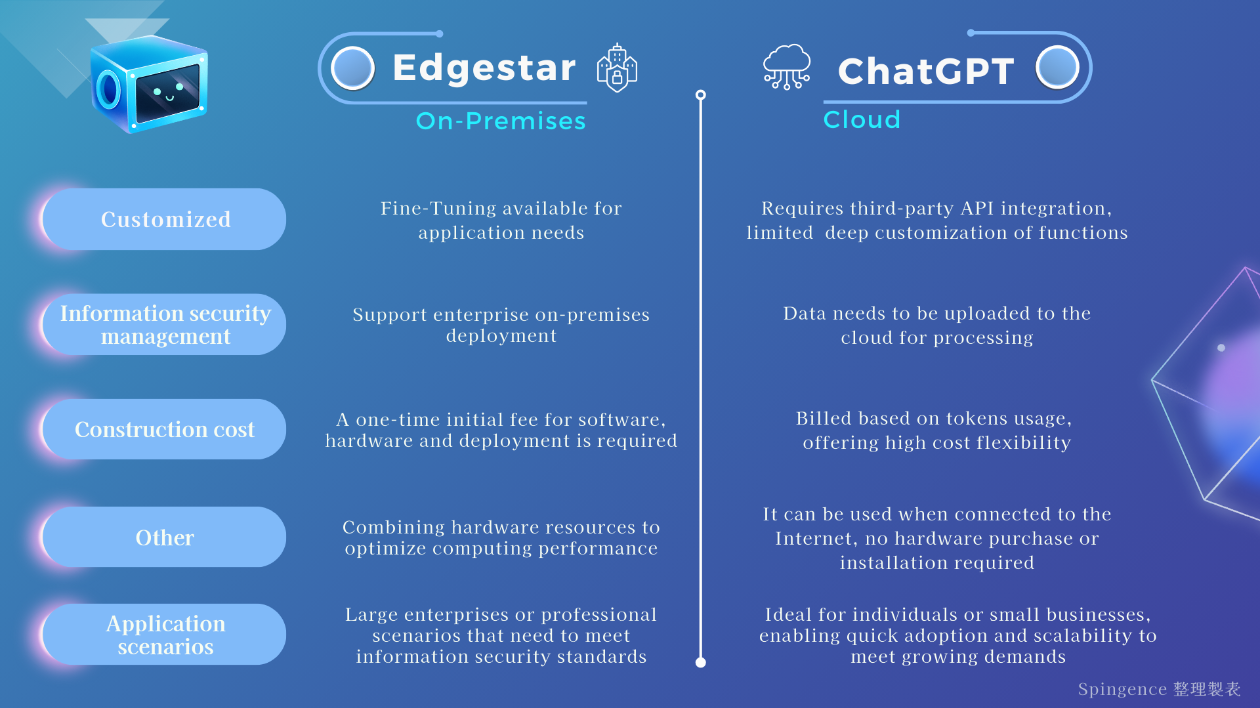

In addition to model size, LLMs can also be categorized by deployment mode: on-premises or cloud-based. ChatGPT is a representative cloud-based solution, while Edgestar, developed by Spingence, is an on-premises solution. The two deployment types have distinct characteristics and use cases, so businesses can choose the option that best fits their specific needs.

Edgestar is an integrated LLM software and hardware solution designed for enterprises. It is deployed on-premises to meet high standards of data privacy and localized application needs. Its key features include:

● Data Privacy Protection: Keeps all enterprise data securely within the internal environment, ensuring data security and privacy.

● Software-Hardware Integration: Combines Advantech servers and Phison’s aiDAPTIV+ technology to enhance the stability of the LLM while optimizing system performance.

● Flexibility and Customization: Spingence offers an AI agent library, enabling businesses to select appropriate application modules for further development based on their needs. Additionally, businesses can fine-tune models to better match specific use cases.

This solution provides enterprises with a robust, secure, and adaptable platform for AI-powered applications while addressing data privacy concerns.

Cloud-based Solution | ChatGPT

As a widely recognized cloud-based solution, ChatGPT significantly lowers the barriers to using LLM (Large Language Model) technology. Its core features include:

● Quick Deployment: There is no need to purchase additional hardware; users can simply connect via the internet to start using the service.

● High Scalability: ChatGPT supports multiple languages and fields, making it easy to meet global demands.

● Model Iteration: Businesses automatically have access to the latest version of the model, so they benefit from continuous improvements and updates.

This cloud solution provides businesses with a flexible, cost-effective, and scalable way to implement LLM technology without the need for heavy infrastructure investments.

For individuals or small businesses, cloud-based solutions like ChatGPT are ideal because of their quick deployment and lower cost. They offer easy access via the internet without the need for additional hardware. In contrast, medium to large enterprises, or those with strict data security requirements, are better suited to on-premises solutions like Edgestar. On-premises deployments allow greater control over data privacy and can be customized to better align with specific use cases.
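The rule of thumb in the paragraph above can be written down as a small decision helper. This is only a sketch of the guidance as stated here: the `Organization` type, its fields, and the size labels are hypothetical names introduced for illustration, and a real evaluation would weigh more factors such as budget and in-house technical capability.

```python
from dataclasses import dataclass


@dataclass
class Organization:
    """Hypothetical description of a prospective LLM adopter."""
    size: str  # one of "individual", "small", "medium", "large"
    strict_data_security: bool = False


def recommend_deployment(org: Organization) -> str:
    """Return "on-premises" or "cloud" following the article's rule of thumb.

    Strict data security requirements, or a medium-to-large organization,
    point to on-premises; everything else points to cloud.
    """
    if org.strict_data_security or org.size in ("medium", "large"):
        return "on-premises"
    return "cloud"


if __name__ == "__main__":
    print(recommend_deployment(Organization("small")))
    print(recommend_deployment(Organization("small", strict_data_security=True)))
    print(recommend_deployment(Organization("large")))
```

Note that data security takes priority over size in this sketch, so even a small business with strict data requirements is pointed toward an on-premises deployment.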

Both cloud and on-premises solutions have their own advantages. Cloud solutions offer high scalability and ease of deployment, while on-premises solutions provide more control over data and integration. Businesses can evaluate and choose the right option based on their technical capabilities, requirements, and application scenarios. The powerful language understanding and generation capabilities of LLMs continue to be applied across many industries, helping businesses improve efficiency and competitiveness.

Recommended Reading: Understand How the Edgestar AI Agent Library Can Address Various Business Use Cases