Enhancing Enterprise Inference Efficiency: Choosing the Most Suitable LLM Inference Service

Enhancing Enterprise Inference Efficiency: Choosing the Most Suitable LLM Inference Service

In the wave of digital transformation, the demand for large language models (LLMs) among enterprises is rapidly increasing. From customer service automation to content generation, data analysis, and decision support, LLMs are becoming an essential foundation for enterprise intelligence. However, to maximize the potential of these powerful AI models, enterprises need not only strong computing resources but also an efficient and stable inference solution to ensure smooth LLM operations and prevent high latency from affecting user experience.

Challenges in Enterprise AI Applications: Bottlenecks in LLM Inference

While LLMs have powerful natural language processing capabilities, their operation involves a large amount of computation, which, if not properly optimized, can lead to:

- Slow inference speed: When users input queries, AI responses take more than 5 seconds, severely affecting real-time interaction experience.

- High resource consumption: Traditional inference methods may lead to excessive GPU/CPU load, increasing operational costs.

- Difficulty in supporting high concurrency: When numerous users request AI services simultaneously, the system may experience delays or be unable to handle the load.

How LLM Inference Services Solve Inference Efficiency Issues

1. What is LLM Inference Service?

LLM Inference Services are designed to enhance inference speed and reduce resource consumption. These services optimize the operation of LLMs using specialized technologies, enabling faster and more stable AI service delivery. These technologies include:

- Dynamic Batching: Enhances concurrency and improves throughput.

- Memory Optimization Techniques (such as PagedAttention): Reduces memory usage during the inference process and supports long-text processing.

- GPU-Accelerated Architecture Design: Maximizes GPU efficiency, reducing inference latency.

2. Popular LLM Inference Service Options

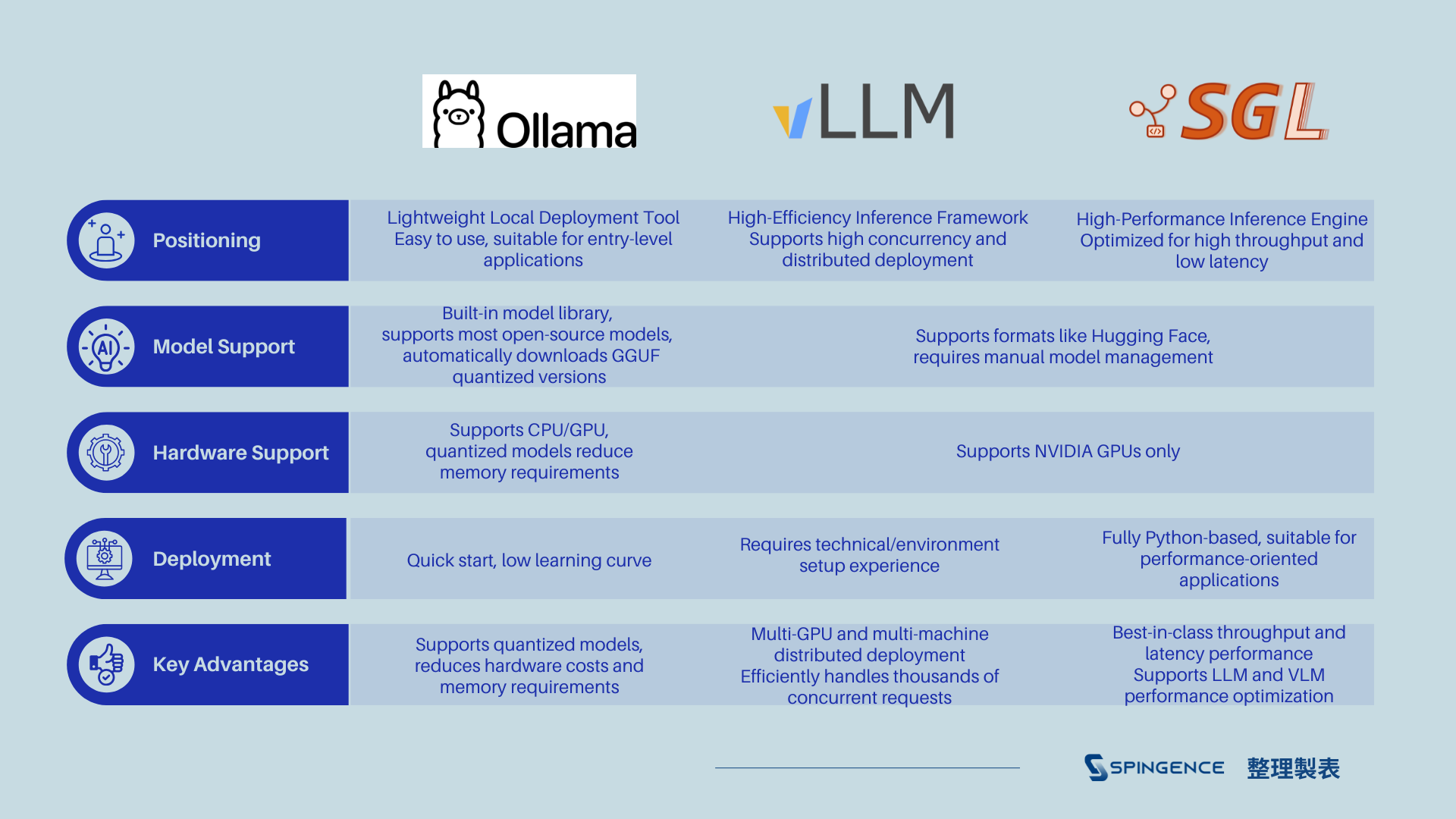

There are many tools available on the market for selecting an LLM inference solution. We have compiled three well-known inference frameworks: Ollama, vLLM, and SGLang, each with its unique features suitable for different business needs.

- Ollama: If you need to quickly deploy an LLM for prototype development or internal testing, Ollama is a simple and easy-to-use open-source deployment tool that enables you to get up and running in the shortest time.

- vLLM: When your business needs to support large-scale requests and high-concurrency processing, vLLM provides optimized inference performance to ensure high throughput and low latency, making it an ideal choice for API services.

- SGLang: For multi-modal AI tasks, SGLang supports heterogeneous hardware and can handle more complex data flows, making it suitable for enterprise AI applications that require scalability.

- If you want to quickly deploy a local LLM application 👉 Ollama

- If you need high-performance inference services (such as API batch requests) 👉 vLLM

- If you want to develop complex multi-modal AI tasks (such as image-text, OCR) 👉 SGLang

Edgestar: Making Enterprise LLM Deployment Easier

Let Spingence help you break through the AI inference bottleneck and accelerate business innovation! 💡